Imagine this: You’ve just finished building an amazing machine learning model, ready to make its triumphant debut in the real world. But then you hit a wall – how do you even get your data prepped, processed, and fed into your model in a reliable, scalable way? This is where Airflow swoops in to save your data science project from logistical chaos.

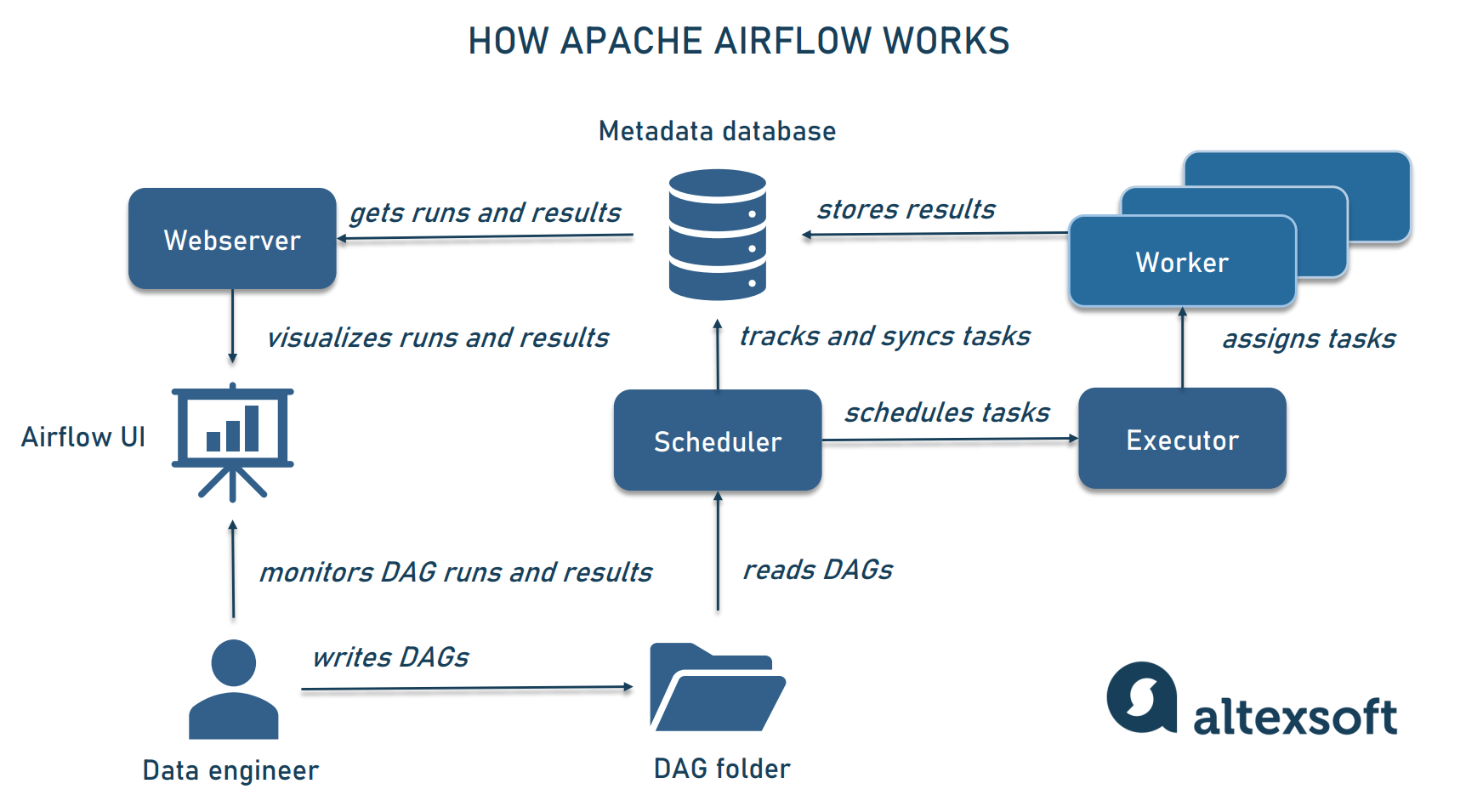

Image: www.altexsoft.com

Airflow, the open-source workflow management platform, is the unsung hero of many data science teams. It’s the glue that holds your data pipeline together, allowing you to orchestrate complex data workflows with ease. It’s not just about moving data; it’s about ensuring that your data pipeline runs smoothly, reliably, and on schedule, so you can focus on what you do best: creating groundbreaking models.

Airflow – The Powerhouse of Data Science Pipelines

Unraveling Airflow’s Magic

Airflow takes the hassle out of building and managing data pipelines by providing a clear and intuitive framework. You can define your data flows using Directed Acyclic Graphs (DAGs), which are like blueprints outlining the sequence and dependencies of your tasks. These DAGs are written in Python, making it easy for data scientists to integrate their code into the workflow.
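To make the DAG idea concrete without requiring an Airflow installation, here is a plain-Python sketch (not Airflow’s API) that models a small pipeline as a dependency graph and derives a valid execution order with the standard library’s `graphlib` module (Python 3.9+). The task names are hypothetical. In a real Airflow DAG file you would declare the same dependencies between task objects with the `>>` operator, e.g. `extract >> validate`.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract": set(),
    "validate": {"extract"},
    "build_features": {"validate"},
    "train_model": {"build_features"},
    "evaluate_model": {"train_model"},
}


def execution_order(graph: dict[str, set[str]]) -> list[str]:
    """Return an order in which every task runs after all of its upstream tasks."""
    return list(TopologicalSorter(graph).static_order())


def is_acyclic(graph: dict[str, set[str]]) -> bool:
    """A DAG must contain no cycles; graphlib raises CycleError if it finds one."""
    try:
        TopologicalSorter(graph).prepare()
        return True
    except CycleError:
        return False


print(execution_order(pipeline))
```

A graph such as `{"a": {"b"}, "b": {"a"}}` would be rejected by `is_acyclic`, which is exactly the "acyclic" constraint in a DAG; Airflow likewise refuses to load a DAG that contains a cycle.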

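Airflow is built for scalability. Rather than processing data itself, it schedules tasks and hands them to an executor, such as the Celery or Kubernetes executor, which can spread independent tasks across many workers; the heavy lifting on large datasets is usually done by the systems those tasks call, such as Spark or a data warehouse. Airflow’s web UI also lets you monitor every run, so you can identify bottlenecks and failed tasks quickly. This means fewer sleepless nights worrying about your pipeline’s integrity and more time to focus on your data science projects.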
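One reason a DAG scales well is that tasks that do not depend on each other can run at the same time; in Airflow, the executor is what distributes such tasks to workers. The plain-Python sketch below is not Airflow code, and its task names are hypothetical. It uses `graphlib` (Python 3.9+) to group a fan-out/fan-in pipeline into "waves": every task in a wave has all of its upstream tasks finished, so the tasks within one wave could run in parallel.

```python
from graphlib import TopologicalSorter

# Hypothetical fan-out/fan-in pipeline: three feature jobs depend only on "extract".
pipeline = {
    "extract": set(),
    "features_a": {"extract"},
    "features_b": {"extract"},
    "features_c": {"extract"},
    "train_model": {"features_a", "features_b", "features_c"},
}


def parallel_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into batches whose upstream tasks have all completed."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks that could run concurrently
        waves.append(ready)
        sorter.done(*ready)  # pretend every task in this wave succeeded
    return waves


for i, wave in enumerate(parallel_waves(pipeline), start=1):
    print(f"wave {i}: {wave}")
```

With more workers available, the middle wave’s three feature jobs can finish in roughly the time of one, which is the kind of speed-up an executor provides.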

Benefits of Airflow

Airflow empowers you in many ways. Here are some key advantages it offers for data science pipelines:

- Simplified Workflow Management: Airflow makes it easy to define and manage your data pipelines with DAGs, streamlining your entire workflow.

- Scalability and Reliability: Airflow distributes tasks across workers and can retry failed tasks automatically, so pipelines that crunch large datasets in external systems keep running smoothly, even in complex scenarios.

- Monitoring and Error Handling: Airflow’s web UI, per-task logs, automatic retries, and failure alerts give you a clear view of your pipeline’s health and a way to respond when something breaks.

- Flexibility and Integration: Through its operators and provider packages, Airflow works with a wide range of tools and technologies: tasks can run Python code, shell commands (including Java programs), and operations against various databases and cloud storage services.

- Open-Source Power: Airflow’s open-source nature fosters a vibrant community with ample documentation, tutorials, and support.
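Much of Airflow’s error handling comes from per-task settings such as `retries` and `retry_delay`, which re-run a failed task a set number of times before marking it as failed. The sketch below imitates that behaviour in plain Python so it runs anywhere; the `run_with_retries` helper, its `sleep` hook, and the `flaky_load` task are hypothetical and are not part of Airflow.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(task: Callable[[], T], retries: int = 2,
                     retry_delay: float = 0.0,
                     sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `task`; on an exception, retry up to `retries` more times.

    Mirrors the idea behind Airflow's `retries`/`retry_delay` task arguments:
    the task gets 1 + retries attempts before the failure is re-raised.
    """
    attempt = 0
    while True:
        try:
            return task()
        except Exception:
            attempt += 1
            if attempt > retries:
                raise  # out of retries: surface the failure
            sleep(retry_delay)  # wait before the next attempt


# Usage: a hypothetical flaky task that fails twice, then succeeds.
calls = {"n": 0}


def flaky_load() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("database not reachable yet")
    return "loaded"


print(run_with_retries(flaky_load, retries=2))  # succeeds on the third attempt
```

Transient failures such as a briefly unreachable database are the main reason to set retries; a genuine bug will still fail after the last attempt, which is what you want to see in the monitoring view.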


Airflow in Action: Real-World Applications

Airflow shines in diverse scenarios, providing crucial support for various data science tasks:

- Feature Engineering and Preparation: Airflow can orchestrate the complex process of creating and transforming features for your machine learning models.

- Model Training and Evaluation: Airflow automates the entire training pipeline, including data ingestion, model building, and performance evaluation.

- Model Deployment and Monitoring: Airflow can orchestrate the steps that deploy a trained model and schedule recurring jobs that check its performance in production.

- Batch Processing and ETL: Airflow excels at managing large-scale batch processing jobs and Extract, Transform, and Load (ETL) operations.

- Data Visualization and Reporting: Airflow can schedule jobs that generate visualizations and reports automatically, while its own UI gives you insight into your pipeline’s activity and performance.
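The batch processing and ETL use case follows the extract, transform, load pattern, and in Airflow each step would typically be its own task. The plain-Python sketch below shows the three steps as separate functions that load into an in-memory SQLite table; the sample records, the `sales` table, and the function names are hypothetical stand-ins for your real sources and targets.

```python
import sqlite3


def extract() -> list[dict]:
    """Stand-in for reading from an API, a file, or a source database."""
    return [
        {"region": "north", "amount": "120.50"},
        {"region": "south", "amount": "80"},
        {"region": "north", "amount": "n/a"},  # malformed record
    ]


def transform(rows: list[dict]) -> list[tuple[str, float]]:
    """Parse amounts, dropping rows that cannot be converted."""
    clean = []
    for row in rows:
        try:
            clean.append((row["region"], float(row["amount"])))
        except ValueError:
            continue  # skip malformed values instead of failing the whole batch
    return clean


def load(records: list[tuple[str, float]], conn: sqlite3.Connection) -> int:
    """Write records to the target table and return how many were inserted."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales (region TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", records)
    conn.commit()
    return len(records)


conn = sqlite3.connect(":memory:")
inserted = load(transform(extract()), conn)
print(inserted, conn.execute("SELECT SUM(amount) FROM sales").fetchone()[0])
```

In an Airflow version of this pipeline, large intermediate datasets are usually written to external storage between tasks rather than passed directly, because Airflow’s built-in mechanism for passing values between tasks (XCom) is meant for small values.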

Airflow – The Future of Data Science Pipelines

As data science continues to evolve, Airflow is poised to play an even more pivotal role in the field. The community is constantly innovating, adding features and improvements that address emerging challenges and enhance the data science workflow.

Key trends emerging in Airflow include:

- Cloud Integration: Provider packages connect Airflow to AWS, Azure, and Google Cloud services, and managed offerings such as Amazon Managed Workflows for Apache Airflow (MWAA) and Google Cloud Composer further simplify deployment and scaling.

- Enhanced Security and Governance: With the increasing importance of data security, Airflow continues to strengthen features such as role-based access control in its web UI and pluggable secrets backends for storing credentials.

- Machine Learning Integration: Airflow is increasingly used to orchestrate end-to-end machine learning pipelines, relying on integrations with ML platforms (for example, the SageMaker operators in the Amazon provider package) for the training and serving itself.

Mastering Airflow: Tips for Success

Let’s look at some expert advice on utilizing Airflow effectively for your data science projects:

- Start with Simple DAGs: Begin with straightforward data pipelines to gain familiarity with Airflow’s syntax and functionality. As you get comfortable, you can gradually scale up to more complex pipelines.

- Leverage Provider Packages and Plugins: Airflow’s ecosystem offers a plethora of provider packages and community plugins with ready-made operators, hooks, and sensors, which can significantly streamline your development process and provide reusable components for common data science tasks.

- Prioritize Monitoring and Logging: Establish effective monitoring and logging practices from the beginning. This allows you to quickly identify and address any issues in your pipeline and gain valuable insights into its performance.

- Document Your DAGs: Write comprehensive documentation for your DAGs, explaining the logic, dependencies, and expected outcomes. Airflow can show Markdown documentation attached to a DAG through its `doc_md` parameter right in the web UI. This will make your pipelines easier to understand and maintain, especially when working in collaboration with others.

- Embrace the Community: Engage with the vibrant Airflow community on forums, social media platforms, and the official documentation. Share your challenges, seek advice, and contribute to the community’s growth.
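For the monitoring and logging tip, a low-effort habit is to log from inside every task with Python’s standard `logging` module; in Airflow, messages logged from task code typically end up in that task’s log, viewable in the web UI. The plain-Python sketch below uses a hypothetical `build_features` task and made-up records, logging row counts at each step so that a sudden drop in data is easy to spot.

```python
import logging

logging.basicConfig(level=logging.INFO,
                    format="%(asctime)s %(levelname)s %(name)s: %(message)s")
log = logging.getLogger("pipeline.build_features")  # hypothetical task name


def build_features(rows: list[dict], min_expected: int = 1) -> list[dict]:
    """Drop rows that have no label and log how many survive."""
    log.info("received %d rows", len(rows))
    kept = [r for r in rows if r.get("label") is not None]
    log.info("kept %d rows after dropping unlabeled ones", len(kept))
    if len(kept) < min_expected:
        # A warning in the task log is far easier to find than a silently empty output.
        log.warning("only %d rows left; expected at least %d", len(kept), min_expected)
    return kept


features = build_features([{"x": 1, "label": 0}, {"x": 2, "label": None}], min_expected=2)
```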

Airflow FAQs

Here are some frequently asked questions about Airflow:

Q: What programming languages can I use with Airflow?

A: Airflow is written in Python and DAGs are defined in Python, making it an ideal choice for data scientists who are familiar with the language. Individual tasks can still run code written in other languages, such as Java programs or Bash scripts, through operators like `BashOperator`, `DockerOperator`, or `KubernetesPodOperator`.
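Running a non-Python task usually means launching an external process, which is roughly what `BashOperator` does: it runs a command and, in general, treats a non-zero exit code as a task failure. The plain-Python sketch below imitates that contract with `subprocess`; the `run_command_task` helper is hypothetical, and the example command calls the current Python interpreter only so that it runs anywhere (in practice it might be something like `java -jar your-job.jar`).

```python
import subprocess
import sys


def run_command_task(cmd: list[str]) -> str:
    """Run an external command; raise if it exits non-zero, else return its stdout."""
    result = subprocess.run(cmd, capture_output=True, text=True)
    if result.returncode != 0:
        # Non-zero exit is treated as a task failure, like a failed BashOperator task.
        raise RuntimeError(
            f"command failed with exit code {result.returncode}: {result.stderr.strip()}"
        )
    return result.stdout.strip()


# Any executable works here; the current Python interpreter keeps the example portable.
print(run_command_task([sys.executable, "-c", "print('hello from another process')"]))
```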

Q: How can I deploy and manage Airflow in a production environment?

A: Airflow offers several deployment options: running it on a local machine for development, deploying it with Docker or on Kubernetes (the project publishes an official Docker image and Helm chart), or using a managed service from a cloud provider, such as Amazon MWAA on AWS or Cloud Composer on Google Cloud.

Q: What are some alternatives to Airflow for managing data pipelines?

A: While Airflow is a popular choice, other workflow management systems include Prefect, Luigi, and Dagster. Each of these platforms offers unique strengths and features, so it’s essential to choose the one that best suits your specific requirements and technical preferences.

Q: Is Airflow suitable for building real-time data pipelines?

A: Airflow is primarily designed for batch processing and scheduled tasks. It can run pipelines frequently, but it does not process individual events as they arrive, so stream-processing tools such as Apache Kafka or Spark Structured Streaming are usually a better fit for truly real-time data pipelines.
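The batch orientation shows up in how Airflow schedules work: each scheduled run covers a fixed data interval (one day for a daily schedule), and the run starts after that interval has ended rather than as each event arrives. The plain-Python sketch below is not Airflow’s scheduler; it simply enumerates daily half-open windows between two hypothetical dates to show what a series of scheduled batch runs would cover.

```python
from datetime import datetime, timedelta, timezone


def daily_intervals(start: datetime, end: datetime) -> list[tuple[datetime, datetime]]:
    """Split [start, end) into consecutive one-day, half-open batch windows."""
    intervals = []
    current = start
    while current + timedelta(days=1) <= end:
        intervals.append((current, current + timedelta(days=1)))
        current += timedelta(days=1)
    return intervals


start = datetime(2024, 1, 1, tzinfo=timezone.utc)
end = datetime(2024, 1, 4, tzinfo=timezone.utc)
for lo, hi in daily_intervals(start, end):
    # Each window would be processed by one batch run, after `hi` has passed.
    print(lo.date(), "->", hi.date())
```

A streaming system, by contrast, would handle each event within seconds of its arrival instead of waiting for a window to close.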


Summary and Call to Action

Airflow is a powerful tool for managing data pipelines, offering scalability, reliability, and ease of use. It simplifies the workflow for data scientists, allowing them to focus on building innovative models and solutions.

Are you ready to unleash the power of Airflow in your data science projects? Start your journey today by exploring the Airflow documentation and experimenting with building your own data pipelines.
